Infrastructure

All the infrastructure runs on AlmaLinux 9, a community-owned and community-governed, forever-free enterprise Linux distribution. It focuses on long-term stability and provides a robust, production-grade platform that is binary compatible with Red Hat Enterprise Linux (RHEL). More information is available on the AlmaLinux website:

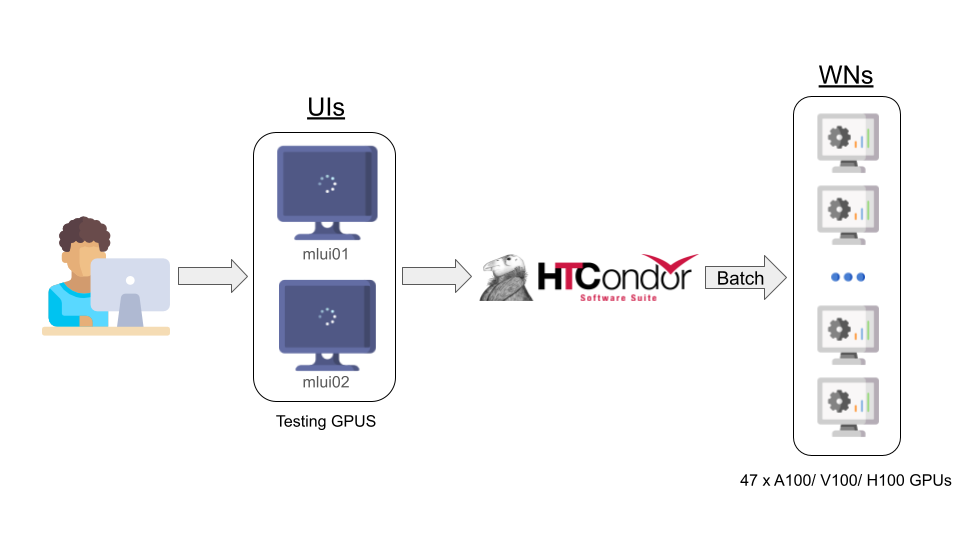

The hardware nodes of the infrastructure are divided into three classes:

User Interfaces (UI): the entry points for users, which also provide a working environment to compile and test programs. These machines have GPUs and access to the storage services. They are intended for testing, so they have limited computing time and power. When their jobs are ready, users can submit them to the Worker Nodes in batch mode.

Worker Nodes (WN): where the batch user jobs are executed. They contain high-end CPUs and larger memory configurations, and include nodes with up to 8 high-end GPGPUs. These nodes allow more computing time and power than the User Interfaces.

Storage Nodes (SN): disk servers that store user and project data, accessible from both User Interfaces and Worker Nodes.

System Overview

Artemisa is equipped with:

User interfaces:

- 1 x User Interface (mlui01.ific.uv.es) with:

- 1 x User Interface (mlui02.ific.uv.es) with:

Worker nodes:

- 11 x worker node with:

- 1 x worker node with:

- 2 x worker node with:

- 20 x worker node with:

- 1 x worker node with:

- 2 x worker node with:

Disk servers:

- 3 x disk server with:

- 5 x disk server with:

Networking:

All nodes have a 10 Gbps Ethernet connection to the IFIC data center network.
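The node counts in the System Overview lists can be tallied per class with a few lines of Python. This is only an illustrative sketch: the dictionary keys and the `tally` function are hypothetical names introduced here, and only the counts themselves come from the lists above. The hardware specification of each group is not reproduced.

```python
# Node counts copied from the System Overview lists above.
# The keys are hypothetical labels chosen for this sketch; the per-group
# hardware details are not part of this example.
INVENTORY = {
    "user_interfaces": [1, 1],             # mlui01 and mlui02
    "worker_nodes": [11, 1, 2, 20, 1, 2],  # one entry per listed group
    "disk_servers": [3, 5],
}


def tally(inventory):
    """Return the number of nodes per class plus an overall total."""
    totals = {node_class: sum(groups) for node_class, groups in inventory.items()}
    totals["all_nodes"] = sum(totals.values())
    return totals


if __name__ == "__main__":
    for name, count in tally(INVENTORY).items():
        print(f"{name}: {count}")
```

Keeping the counts as one list entry per group mirrors the layout of the lists, so a change in any group only requires editing a single number.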